Chatbot queries by SDK

With the AI Hub software development kit (SDK), you can write a Python script that interacts with an AI Hub chatbot programmatically, rather than making direct API calls.

This guide includes a complete script to use as a model when writing scripts for interacting with chatbots.

Before you begin

Review the developer quickstart and then install the AI Hub SDK.

Chatbot queries are asynchronous

Getting a reply to a chatbot query is an asynchronous operation, meaning that it involves multiple requests:

- Send your query to the chatbot and get a query ID in response.

- Use the query ID to check the chatbot’s progress in processing the query.

- When the chatbot finishes processing, use the query ID to retrieve the chatbot’s reply.

Import modules and initialize the client

The first step of using the SDK is to import key Python modules.

Next, initialize a client that lets you interact with the AI Hub API. For information on what values to use for the parameters, see the Developer quickstart.

Provide the chatbot ID

Identify the chatbot to query by providing its unique ID in a Python dictionary.

For chatbot queries, the type key must have the string CHATBOT as its value.

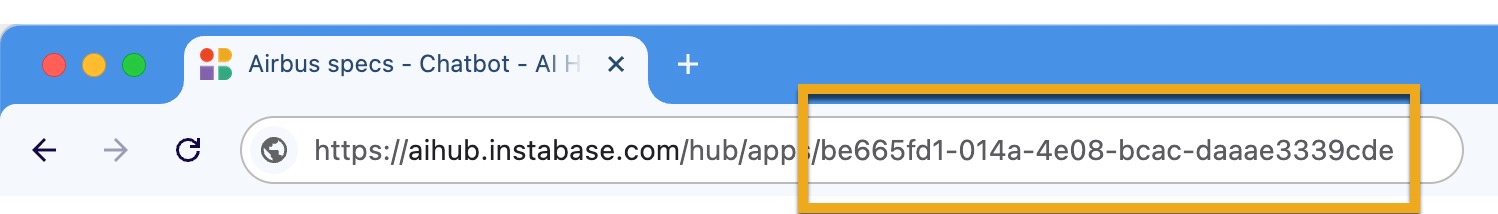

The value for <CHATBOT-ID> is in the chatbot URL. The chatbot ID is the 36-character string at the end of the URL, excluding the slash at the beginning of the string.

To find the chatbot’s URL, navigate to Hub and run the chatbot you want to send queries to.
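As a rough sketch of the rule above, the helper below pulls the 36-character ID off the end of a chatbot URL and wraps it in the dictionary used for chatbot queries. The function names, the example URL, and the `id` key are assumptions made for illustration; only the `type` key and its `CHATBOT` value are stated in this guide.

```python
from urllib.parse import urlparse


def chatbot_id_from_url(url: str) -> str:
    """Return the 36-character chatbot ID at the end of a chatbot URL."""
    # Keep only the path, drop any trailing slash, and take the last segment.
    last_segment = urlparse(url).path.rstrip("/").rsplit("/", 1)[-1]
    if len(last_segment) != 36:
        raise ValueError(
            f"expected a 36-character chatbot ID, got {last_segment!r}"
        )
    return last_segment


def chatbot_source(url: str) -> dict:
    """Build the dictionary that identifies the chatbot to query."""
    # "type" must be "CHATBOT" for chatbot queries.
    # The "id" key name is an assumption, not confirmed by this guide.
    return {"type": "CHATBOT", "id": chatbot_id_from_url(url)}
```

For example, calling `chatbot_source` with a hypothetical URL that ends in `/0123abcd-4567-89ef-0123-456789abcdef` would yield a dictionary whose ID is that final segment, without the leading slash.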

Send a query to the chatbot

You’re ready to send a query to the chatbot. It can handle any query that you might use with the graphical user interface, except for requests for graphs.

As part of the query, you can tell the chatbot which model to use when coming up with a reply.

You also need to specify whether to include information on which of the chatbot’s knowledge base documents contributed to its reply.
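The following is a minimal sketch of how submitting a query might be wrapped in a function. The method name `client.queries.run`, its keyword arguments (`query`, `source_app`, `model_name`, `include_source_info`), and the `query_id` attribute on the response are all assumptions, named by analogy with `client.queries.status`; confirm them against the SDK reference before relying on them.

```python
def submit_query(client, source_app, query, include_source_info, model_name=None):
    """Send a query to a chatbot and return the query ID.

    ASSUMPTION: client.queries.run, its keyword names, and
    response.query_id are illustrative and not confirmed by this guide.
    """
    if not isinstance(include_source_info, bool):
        raise TypeError("include_source_info must be True or False")

    params = {
        "query": query,
        "source_app": source_app,
        "include_source_info": include_source_info,
    }
    # Choosing a model is optional, so pass it only when one is given.
    if model_name is not None:
        params["model_name"] = model_name

    response = client.queries.run(**params)
    return response.query_id
```

Keeping the source-information flag as a required argument mirrors the requirement above that you always state whether to include it.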

User-defined values

Check the query processing status

After you submit the query, repeatedly check the query’s status with client.queries.status(<QUERY-ID>).status so you can tell when a response is ready. Three statuses are possible:

- RUNNING: the chatbot is still processing the query.

- COMPLETE: the chatbot has finished processing the query and has a reply.

- FAILED: something went wrong. Try resubmitting the query.
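The status check described above can be sketched as a small polling loop. It relies only on `client.queries.status(<QUERY-ID>).status` and the three status strings named in this section. The function name, the five-second polling interval, and the timeout are illustrative assumptions rather than SDK defaults.

```python
import time


def wait_for_query(client, query_id, poll_seconds=5.0, timeout_seconds=300.0,
                   sleep=time.sleep, clock=time.monotonic):
    """Poll a query until it is COMPLETE or FAILED, then return the response.

    Raises TimeoutError if the query is still running after timeout_seconds.
    The interval and timeout values are assumptions chosen for illustration.
    """
    deadline = clock() + timeout_seconds
    while True:
        response = client.queries.status(query_id)
        # COMPLETE and FAILED are terminal; anything else means keep waiting.
        if response.status in ("COMPLETE", "FAILED"):
            return response
        if clock() >= deadline:
            raise TimeoutError(
                f"query {query_id} still {response.status} "
                f"after {timeout_seconds} seconds"
            )
        sleep(poll_seconds)
```

The caller still has to inspect the returned status: on COMPLETE the reply can be parsed, and on FAILED one option is to resubmit the query.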

Parse the reply

When a response’s status is COMPLETE, you can extract the chatbot’s reply from the response.

If you asked for source information when submitting your query, you can also extract details about which documents contributed to the reply.

A query can also end with FAILED status. You must decide how best to handle that situation in your workflow.

Complete script

This script demonstrates a complete, end-to-end workflow using the AI Hub SDK. It combines all the snippets from above.

The script performs these tasks:

- Import key modules and initialize the API client.

- Send an asynchronous query to a chatbot.

- Repeatedly check the query status.

- Print the answer and source information.