Running accuracy tests

Accuracy tests compare run results against ground truth values to measure performance and identify areas for improvement.

Before you begin

You must have one or more ground truth datasets for the app you want to test.

- From the Hub, open the app you want to test.

- Select the Accuracy tests tab.

- Click Test accuracy.

- Select the app version and ground truth dataset you want to use for testing, then click Next.

- (Optional) Select the Configuration tab and modify dataset parameters as needed.

  Configuration changes that you make for any given accuracy test are saved to the selected ground truth dataset for future tests.

- In the Test accuracy window, click Run test.

An app run begins using files in the ground truth dataset you selected. Run results are compared to the ground truth values to generate accuracy metrics.

Viewing accuracy tests

The Accuracy tests tab for each app displays a list of accuracy tests run against all app versions. The page summarizes key accuracy metrics for each test.

To see a full accuracy report, click any completed accuracy test.

Accuracy reports include the testing metadata and key metrics shown on the accuracy test page. The report also includes a snapshot of the associated ground truth dataset at the time the accuracy test ran, providing visibility into the exact data used for the test.

Project overview metrics provide a high-level summary of classification and extraction validity and accuracy. Similarly, the Summary by class section reports classification and extraction validity and accuracy by class.

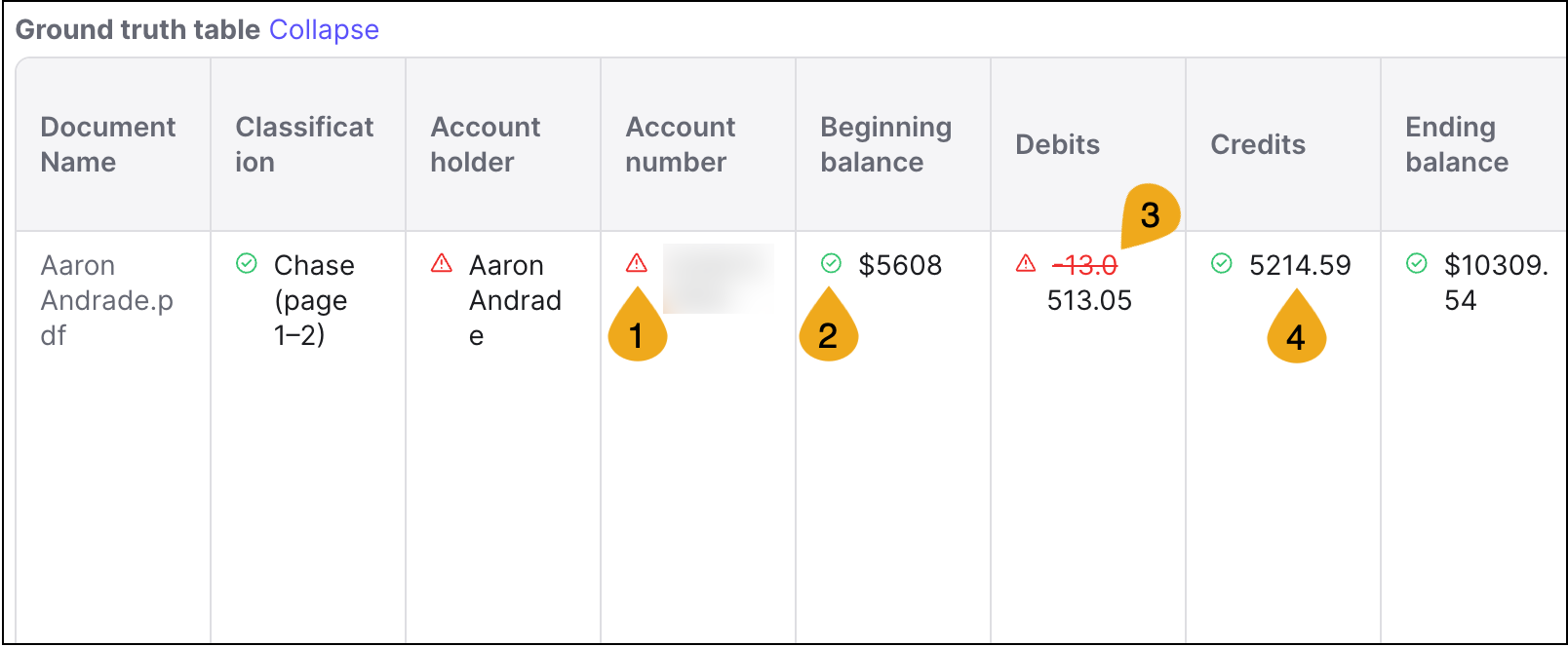

Extraction details breaks down accuracy metrics for individual fields within each class. Use this section to gain a better understanding of project and class summary metrics. You can expand the Ground truth table to drill into results for each field. Each cell in the Ground truth table reports a classification or extraction result. Icons indicate validation results, and ground truth values are displayed when they differ from the run results:

- Result failed validation, indicated by a red error icon.

- Result passed validation, indicated by a green checkmark.

- Result didn’t match ground truth value, indicated in red strikethrough text with the ground truth value reported below.

- Result matched ground truth value, indicated in standard text.
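The four indicators above combine two independent checks: whether the result passed validation, and whether it matched the ground truth value. As a rough sketch only, the logic can be modeled as follows. The function name `describe_cell` and the returned dictionary keys are hypothetical and chosen for illustration; they are not part of the product, and the sketch assumes every cell has a validation outcome.

```python
from typing import Optional


def describe_cell(result: str, ground_truth: str, passed_validation: bool) -> dict:
    """Return a description of how a ground truth table cell would be displayed.

    Hypothetical helper: models the legend above, not the product's actual code.
    """
    # Validation outcome determines the icon.
    icon = "green checkmark" if passed_validation else "red error icon"

    # Ground truth comparison determines the text style.
    if result == ground_truth:
        text_style = "standard"
        shown_ground_truth: Optional[str] = None  # nothing extra to display
    else:
        text_style = "red strikethrough"
        shown_ground_truth = ground_truth  # reported below the result

    return {
        "icon": icon,
        "text_style": text_style,
        "shown_ground_truth": shown_ground_truth,
    }


# A result that passes validation but differs from the ground truth value.
cell = describe_cell("2024-01-05", "2024-01-06", passed_validation=True)
```

Note that a cell can pass validation and still differ from its ground truth value, which is why the two indicators are shown separately.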

To see a full-screen comparison of the ground truth value and the run result, hover over the result in any cell and click the view icon. This full-screen comparison is helpful for reviewing tables and longer-form results. From this view, you can also set the run result as the new ground truth value if needed.

Comparing accuracy tests

Compare two accuracy tests to see how results changed between app versions or AI runtime versions.

To compare accuracy tests, click Compare tests, then select two tests to compare. For an accurate comparison, select tests that use the same ground truth dataset.

Accuracy comparison reports include overview metrics as well as results for each class and field. Trend arrows and percentages indicate the direction and magnitude of change between the two tests.
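To make the idea of direction and magnitude concrete, here is a minimal sketch of how a trend could be derived from the same metric in two tests. It assumes the change is expressed in percentage points (the later value minus the earlier value); the documentation does not state how the product computes it, and the function name `metric_trend` is hypothetical.

```python
def metric_trend(before: float, after: float) -> tuple[str, float]:
    """Return a trend arrow and the size of the change in percentage points.

    Assumption: change = after - before, both given as percentages (0-100).
    """
    delta = round(after - before, 2)
    if delta > 0:
        arrow = "↑"
    elif delta < 0:
        arrow = "↓"
    else:
        arrow = "→"
    return arrow, abs(delta)


# Raw accuracy for the same ground truth dataset in two tests.
improved = metric_trend(80.0, 92.5)
regressed = metric_trend(90.0, 85.0)
```

A comparison like this is only meaningful when both tests use the same ground truth dataset, because otherwise the two percentages are measured against different data.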

Accuracy metrics

Accuracy metrics measure how valid and how accurate app results are.

Automation rate indicates the percent of classes or fields that are processed without human intervention, either because they pass validation or have no validation rules. While higher automation rates generally indicate efficiency, they can be misleading in apps with few or no validation rules, because results without validation rules count as automated whether or not they are correct.

Validated accuracy indicates the percent of automated classes or fields that match the ground truth dataset. A higher validated accuracy means that results are both valid, as measured by validation rules, and accurate, as measured by ground truth values.

Raw accuracy indicates the percent of classes or fields that match corresponding ground truth values. This metric measures classification or extraction accuracy only, without factoring in validations. Raw accuracy is particularly helpful when it differs significantly in either direction from automation rate. For example, if automation rate is low but raw accuracy is high, this suggests accurate run results but overly strict validation rules.
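The three metrics can be illustrated with a small worked example. This is a sketch based only on the definitions above, not the product's implementation: the `FieldResult` type, its attribute names, and the `accuracy_metrics` function are hypothetical, and a result is treated as automated when it passes validation or has no validation rules (`passed_validation` is `None`).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FieldResult:
    value: str
    ground_truth: str
    # True/False = validation outcome; None = no validation rules (assumption).
    passed_validation: Optional[bool]


def is_automated(r: FieldResult) -> bool:
    # Automated: passed validation, or there were no validation rules to fail.
    return r.passed_validation is None or r.passed_validation


def matches(r: FieldResult) -> bool:
    return r.value == r.ground_truth


def accuracy_metrics(results: list[FieldResult]) -> dict[str, float]:
    if not results:
        raise ValueError("at least one result is required")
    automated = [r for r in results if is_automated(r)]
    total = len(results)
    return {
        # Share of all results that needed no human intervention.
        "automation_rate": 100 * len(automated) / total,
        # Share of automated results that match ground truth.
        "validated_accuracy": (
            100 * sum(matches(r) for r in automated) / len(automated)
            if automated else 0.0
        ),
        # Share of all results that match ground truth, ignoring validation.
        "raw_accuracy": 100 * sum(matches(r) for r in results) / total,
    }


results = [
    FieldResult("Acme", "Acme", None),       # no rules, correct
    FieldResult("100.00", "100.00", False),  # failed validation, correct
    FieldResult("2024", "2024", False),      # failed validation, correct
    FieldResult("NY", "NJ", False),          # failed validation, incorrect
]
metrics = accuracy_metrics(results)
# automation_rate = 25.0, validated_accuracy = 100.0, raw_accuracy = 75.0
```

In this example the automation rate is low (25%) while raw accuracy is high (75%), the pattern described above as a sign that validation rules may be overly strict.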