Interact with chatbots by SDK

The queries endpoint lets you integrate AI Hub chatbots into any workflow. You can send asynchronous queries, track their status, and retrieve replies.

This guide explains how to use the queries endpoint with the Python SDK. Step-by-step code snippets and a complete script provide a reference for writing your own scripts to interact with chatbots.

To do the same with the API from any language, see the Interact with chatbots by API guide.

Import modules and initialize the API client

Import key Python modules.

Initialize a client that lets you interact with the API through Python objects and methods.
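One way to keep credentials out of the script is to read them from environment variables before creating the client. The sketch below only gathers the settings. The environment variable names (`AIHUB_API_KEY`, `AIHUB_API_ROOT`) and the dictionary keys are hypothetical, so check the parameter reference for the arguments the SDK client actually expects.

```python
import os
from typing import Dict, Mapping, Optional


def client_settings_from_env(env: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Collect client settings from environment variables.

    The variable names and dictionary keys are illustrative assumptions,
    not names confirmed by the SDK.
    """
    env = os.environ if env is None else env

    api_key = env.get("AIHUB_API_KEY")
    if not api_key:
        raise RuntimeError("Set AIHUB_API_KEY before initializing the client.")

    settings = {"api_key": api_key}

    # Only include the API root when one is explicitly configured.
    api_root = env.get("AIHUB_API_ROOT")
    if api_root:
        settings["api_root"] = api_root

    return settings
```

The returned dictionary can then be passed to the client constructor as keyword arguments, assuming the constructor accepts arguments with these names.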

Parameter reference

Provide the chatbot ID

Identify the chatbot to query by storing its ID in a Python dictionary.

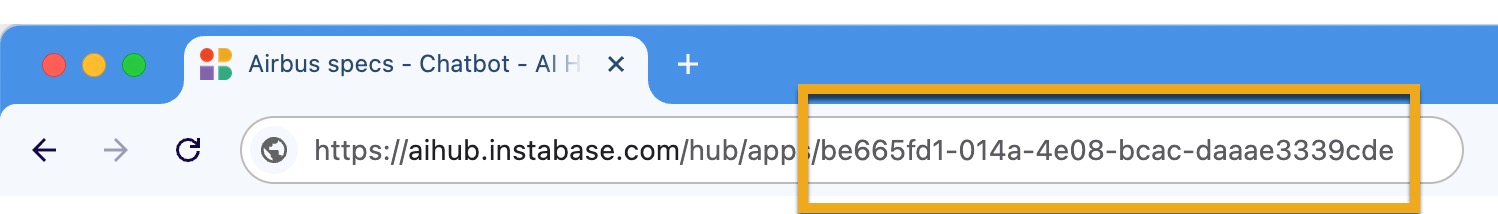

The value for <CHATBOT-ID> is in the chatbot URL. The chatbot ID is the 36-character string at the end of the URL, excluding the slash at the beginning of the string.

To find the chatbot’s URL, navigate to Hub and run the chatbot you want to send queries to.
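If you copy the chatbot URL from the browser, a small helper can pull out the ID for you. This is a minimal sketch that relies only on the rule stated above: the ID is the 36-character string after the last slash. The function name, the example URL, and the dictionary keys (`type`, `id`) are assumptions for illustration.

```python
from urllib.parse import urlparse


def chatbot_id_from_url(url: str) -> str:
    """Return the 36-character chatbot ID at the end of a chatbot URL."""
    # Ignore any query string or fragment, and any trailing slash.
    path = urlparse(url).path.rstrip("/")
    candidate = path.rsplit("/", 1)[-1]
    if len(candidate) != 36:
        raise ValueError(f"Expected a 36-character ID, got {candidate!r}")
    return candidate


# Hypothetical URL; substitute the URL of your own chatbot.
chatbot_id = chatbot_id_from_url(
    "https://example.com/hub/apps/123e4567-e89b-12d3-a456-426614174000"
)

# Assumed dictionary shape for identifying the chatbot to query.
source_app = {"type": "CHATBOT", "id": chatbot_id}
```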

Send a query to the chatbot

You’re ready to send a query to the chatbot. It can handle any query you might use with the graphical user interface, except for requests for graphs.

The query can include these optional settings:

- Which model to use when generating a reply.
- Whether to include details about the chatbot knowledge base documents that contributed to the reply.

The response includes a query ID, which you include in future requests to check the status of the query.
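Because both settings are optional, it can help to build the query arguments in one place and include a setting only when you set it. The sketch below shows that pattern. The argument names (`query`, `source_app`, `model_name`, `include_source_info`) and the example model name are assumptions, so confirm them against the parameter reference before passing them to the SDK.

```python
from typing import Any, Dict, Optional


def build_query_args(
    question: str,
    chatbot_id: str,
    model_name: Optional[str] = None,
    include_source_info: Optional[bool] = None,
) -> Dict[str, Any]:
    """Assemble keyword arguments for a query, omitting unset options."""
    args: Dict[str, Any] = {
        "query": question,
        # Assumed shape of the dictionary that identifies the chatbot.
        "source_app": {"type": "CHATBOT", "id": chatbot_id},
    }
    if model_name is not None:
        args["model_name"] = model_name
    if include_source_info is not None:
        args["include_source_info"] = include_source_info
    return args


# "example-model" is a placeholder, not a real model name.
query_args = build_query_args(
    "What is the refund policy?",
    "<CHATBOT-ID>",
    model_name="example-model",
    include_source_info=True,
)
```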

Parameter reference

Repeatedly check the query processing status

After you submit the query, check its status repeatedly to find out when a reply is ready. The status is one of:

- RUNNING: The chatbot is still processing the query.
- COMPLETE: The chatbot finished processing the query and has a reply.
- FAILED: Something went wrong. Resubmit the query.
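The status check can be sketched as a polling loop that stops on COMPLETE or FAILED and gives up after a fixed number of attempts. To stay independent of the SDK, the sketch takes a `get_status` callable, a hypothetical stand-in for whatever SDK call returns the status of your query ID. The interval and attempt limit are arbitrary defaults.

```python
import time
from typing import Callable


def wait_for_query(
    get_status: Callable[[], str],
    interval_seconds: float = 2.0,
    max_checks: int = 30,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll until the query status is COMPLETE or FAILED, then return it."""
    for _ in range(max_checks):
        status = get_status()
        if status in ("COMPLETE", "FAILED"):
            return status
        if status != "RUNNING":
            raise ValueError(f"Unexpected query status: {status!r}")
        # Still running: wait before checking again.
        sleep(interval_seconds)
    raise TimeoutError(f"Query still running after {max_checks} checks")
```

Bounding the number of checks keeps a stuck query from blocking the workflow indefinitely. The caller decides what to do with a FAILED result, such as resubmitting the query.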

Print the reply and source information

When the query status is COMPLETE, you can extract the chatbot’s reply from the response.

If you asked for source information when submitting your query, you can also extract details about which documents contributed to the reply.
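As a rough illustration, the sketch below formats a reply and its sources for printing. It works on a plain dictionary with hypothetical keys (`reply`, `sources`, `name`, `pages`). The SDK's real response object may be shaped differently, so adapt the field access to what the SDK returns.

```python
from typing import Any, Dict


def format_reply(result: Dict[str, Any]) -> str:
    """Format a reply and any source documents as printable text.

    The dictionary keys are illustrative assumptions, not the SDK's schema.
    """
    lines = [f"Reply: {result['reply']}"]
    # Sources are present only if they were requested with the query.
    for doc in result.get("sources", []):
        pages = ", ".join(str(page) for page in doc.get("pages", []))
        suffix = f" (pages {pages})" if pages else ""
        lines.append(f"Source: {doc['name']}{suffix}")
    return "\n".join(lines)


# Made-up data in the assumed shape, for demonstration only.
print(format_reply({
    "reply": "Refunds are available within 30 days.",
    "sources": [{"name": "policy.pdf", "pages": [2, 3]}],
}))
```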

A query can also end with the FAILED query status. You must decide how best to handle that situation in your workflow.

Complete script

This script combines all the snippets from above and contains all the code needed to interact with a chatbot using the SDK.

The script performs these tasks:

- Imports key Python modules and initializes the API client object.
- Sends a query to the chatbot.
- Repeatedly checks the query processing status.
- Prints the reply and source information.